What Is a Service Mesh and Why Should You Care About It?

In this article, we’ll explain what a service mesh is, why it’s needed, and lay out the service mesh startup landscape as it exists today.

Over the past two years, you may have heard of something called a “service mesh” and related open source technology such as Istio or Linkerd.

As enterprises move from monolithic applications to distributed microservices in pursuit of better resiliency and performance, the service mesh plays a key role in that transition.

First, microservices.

‘Divide and conquer’ is an increasingly common strategy in the architectures of today’s web-scale cloud applications, such as Netflix, Airbnb, Lyft, and Spotify.

Developers are trending towards microservices architectures, which break down large, monolithic cloud applications into digestible bite-sized pieces called microservices. Each microservice is responsible for a concise, well-defined function. For example, Uber - which transitioned towards microservices architecture - may have a ‘billing microservice,’ a ‘ride scheduler microservice,’ and a ‘trip review microservice’ that all work in concert to deliver the full ‘Uber app’ seamlessly.

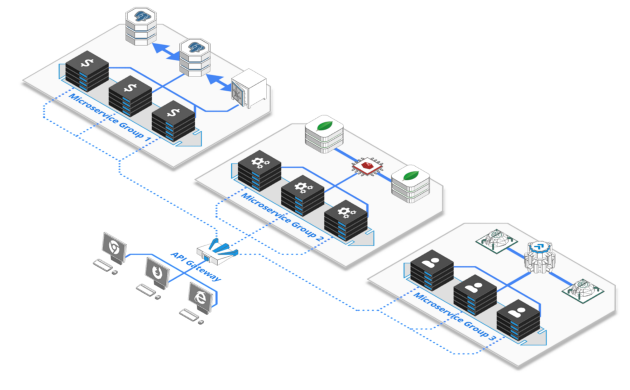

Source: DZone

By dividing up these formerly large structures, developers gain better flexibility, agility and scalability with their apps.

The Main Advantages of Microservices

- A team can take responsibility to build and maintain a specific microservice.

- Since microservices communicate over universal protocols such as REST, teams have the flexibility to choose each service’s underlying technology stack independently of the rest of the app.

- When necessary, individual microservices can be taken out, updated, and pushed back into production with little disruption to the rest of the app.

- During periods of peak traffic, critical components can be scaled individually, rather than scaling the entire monolithic app, which would incur unnecessary costs.

Industry surveys estimate 60% of enterprises deploy microservices in production and 90% plan to in the next five years.

Source: Camunda and Dimensional Research

It’s not all rainbows - the problems with microservice management

Despite all the benefits that microservices architecture brings to AppDev and DevOps teams, managing these distributed systems can become a nightmare.

‘Dividing and conquering’ an application into microservices obscures what is happening at runtime, simply because so many more interconnected services are involved.

Source: AppCentrica

So-called ‘death-star diagrams’ showcase the sheer complexity of managing thousands of microservices that all communicate with one another.

DevOps teams need better solutions to monitor, orchestrate, and secure these distributed networks effectively.

Enter the service mesh.

What Is a Service Mesh?

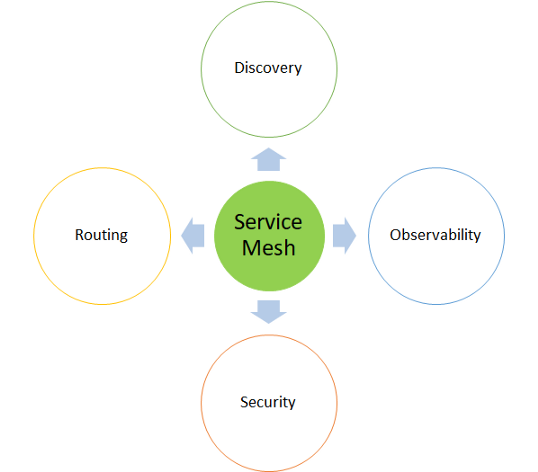

A service mesh is an abstraction layer that sits on top of the microservices and handles service-to-service communication. The mesh tracks, secures, and relays all data flow between services.

Meshes are usually implemented as lightweight network proxies deployed alongside microservices, which carry data flow in and out of each service. These proxies run next to the application code without the application needing to be aware of them. A central controller (referred to as the control plane) oversees and manages all the service traffic flows between the proxies.

Service meshes abstract away how inter-process communications are handled. A service mesh allows you to separate the business logic of the application from observability, and network and security policies.

With this implementation, DevOps teams gain an overarching view of application runtime. The controller offers them the ability to collect performance metrics, redirect traffic, and configure access control.
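To make the sidecar idea concrete, here is a minimal sketch in Python. It is not how Envoy or any real mesh proxy is built; `SidecarProxy`, `billing_service`, and the metric names are hypothetical and exist only for illustration. The point it shows is that the application function contains no telemetry code, yet every request is measured because it passes through the proxy.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class SidecarProxy:
    """Hypothetical stand-in for a mesh proxy deployed next to one service."""
    service_name: str
    handler: Callable[[str], str]  # the unmodified application code
    request_count: int = 0
    latencies_ms: List[float] = field(default_factory=list)

    def handle(self, request: str) -> str:
        # All inbound traffic passes through the proxy first, so it can
        # record telemetry without the application knowing about it.
        start = time.perf_counter()
        try:
            return self.handler(request)
        finally:
            self.request_count += 1
            self.latencies_ms.append((time.perf_counter() - start) * 1000)

    def metrics(self) -> Dict[str, float]:
        # Summary that a control plane could collect from each proxy.
        total = sum(self.latencies_ms)
        avg = total / len(self.latencies_ms) if self.latencies_ms else 0.0
        return {"requests": self.request_count, "avg_latency_ms": avg}


def billing_service(request: str) -> str:
    # Plain business logic: no metrics, routing, or security code here.
    return f"billed:{request}"


proxy = SidecarProxy("billing", billing_service)
reply = proxy.handle("trip-42")
stats = proxy.metrics()
```

In a real mesh the proxy is a separate process that intercepts network traffic rather than a wrapper object, but the separation of concerns is the same.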

What Are the Advantages of a Service Mesh?

A service mesh acts as a layer 7 overlay network that can span on-premise, data center, and cloud deployments. It provides routing, traffic management, load balancing, and telemetry, combined with security capabilities such as access control policies and encryption (mutual TLS).

A service mesh allows you to:

- Manage all services as they grow in number and size.

- Provide efficient communication between them.

- Handle network failures.

- Abstract the mechanics of reliably delivering requests and responses between any number of services.

- Provide end-to-end performance and reliability.

- Provide a uniform, application-wide point for introducing visibility and control into the application runtime.

- Collect telemetry from the proxy containers.

- Give DevOps teams visibility into the microservices.

- Apply TLS application-wide from a single place.

- Run controlled deployments (canary, blue/green) with granular traffic control.

- Detect service failures and avoid them with circuit breakers.
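The circuit breaker mentioned in the last item can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the implementation used by any particular mesh: the class name, the `max_failures` and `reset_after_s` parameters, and the `flaky` upstream are all hypothetical. After a set number of consecutive failures the breaker "opens" and rejects calls immediately, which spares the failing service further load until a cool-down period has passed.

```python
import time
from typing import Callable, List, Optional


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without trying the service."""


class CircuitBreaker:
    def __init__(self, max_failures: int = 3, reset_after_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.reset_after_s = reset_after_s
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, func: Callable[[], str]) -> str:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after_s:
                raise CircuitOpenError("upstream marked unhealthy")
            # Cool-down elapsed: allow one trial call (half-open state).
            # A single further failure will reopen the circuit.
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = func()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # a success closes the circuit again
        return result


def flaky() -> str:
    # Hypothetical upstream that is currently down.
    raise ConnectionError("service down")


breaker = CircuitBreaker(max_failures=2, reset_after_s=60.0)
outcomes: List[str] = []
for _ in range(3):
    try:
        breaker.call(flaky)
    except CircuitOpenError:
        outcomes.append("rejected")
    except ConnectionError:
        outcomes.append("failed")
```

Here the first two calls reach the failing service, and the third is rejected by the breaker without being attempted. In a mesh, this logic lives in the proxy, so the application code does not need to implement it.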

Capabilities and Characteristics of Service Mesh

Characteristics of Service Mesh

- Distributed by nature.

- Controlled by the control plane.

- Provides networking functions such as routing and monitoring through software components (handled by APIs).

- Decreases operational complexity.

- Provides an abstraction layer on top of microservices distributed across various clusters, multiple data centers, or multiple clouds.

- Decentralized.

- Adds little latency, as the proxies are lightweight.

- Proxy sidecars are injected dynamically into the deployment.

Data Plane and Control Plane

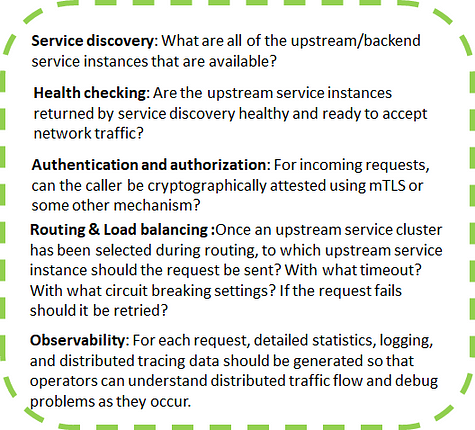

Service mesh data plane: Touches every packet/request in the system. Responsible for service discovery, health checking, routing, load balancing, authentication/authorization, and observability.

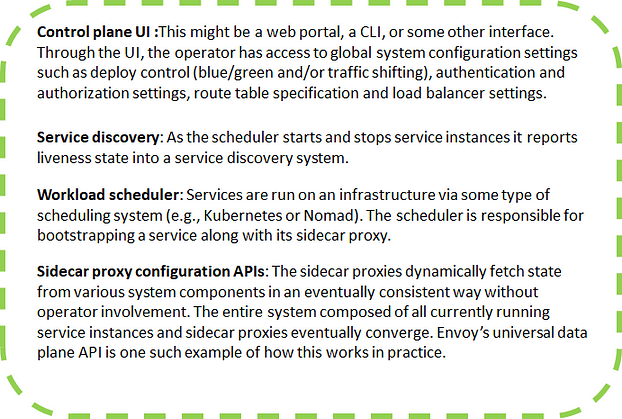

Service mesh control plane: Provides policy and configuration for all of the running data planes in the mesh. It does not touch any packets/requests in the system. The control plane turns all of the data planes into a distributed system.
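The split between the two planes can be illustrated with a small Python sketch. Everything here is hypothetical (`ControlPlane`, `DataPlaneProxy`, the `v1`/`v2` version names, and the 90/10 weights); it is not the API of Istio, Linkerd, or Envoy. The control plane only distributes a routing policy, while each proxy applies that policy to the requests it handles, which is also how a canary rollout with weighted traffic can be expressed.

```python
import random
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class DataPlaneProxy:
    """Hypothetical proxy: it handles requests and applies the current policy."""
    name: str
    weights: Dict[str, int] = field(default_factory=dict)

    def apply_config(self, weights: Dict[str, int]) -> None:
        # Configuration arrives from the control plane, not from the app.
        self.weights = dict(weights)

    def route(self, rng: random.Random) -> str:
        # Pick a service version for this request according to the weights.
        versions = list(self.weights)
        return rng.choices(versions, weights=[self.weights[v] for v in versions])[0]


@dataclass
class ControlPlane:
    """Hypothetical controller: it holds policy and never sees a request."""
    proxies: List[DataPlaneProxy] = field(default_factory=list)

    def register(self, proxy: DataPlaneProxy) -> None:
        self.proxies.append(proxy)

    def push_policy(self, weights: Dict[str, int]) -> None:
        # The same policy is pushed to every registered proxy.
        for proxy in self.proxies:
            proxy.apply_config(weights)


control = ControlPlane()
proxies = [DataPlaneProxy("billing-sidecar"), DataPlaneProxy("reviews-sidecar")]
for p in proxies:
    control.register(p)

# Canary rollout: send roughly 10% of traffic to the new version.
control.push_policy({"v1": 90, "v2": 10})

rng = random.Random(0)  # seeded so the sketch is repeatable
sample = [proxies[0].route(rng) for _ in range(1000)]
canary_share = sample.count("v2") / len(sample)
```

Changing the rollout to a 50/50 split would require only another `push_policy` call; no proxy or application code would change.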

Who’s in The Service Mesh Space?

Today’s service mesh technologies are spearheaded by many open-source initiatives:

- Linkerd (control and data plane)

- Istio (control plane)

- Envoy (data plane)

- Consul (control and data plane)

- SuperGloo (multimesh)

In addition to these open-source projects, the service mesh landscape is dominated by startups and large corporate players, both of which often build on top of open-source technologies by offering either managed services or extra features geared towards enterprises. These providers aim to make the adoption process seamless for large organizations.

Source: Plug and Play Ventures

A few vendors, such as Avi Networks and A10 Networks, build their own proprietary mesh technologies.

M&A activity is heating up, with the recent acquisitions of Avi Networks by VMware in June 2019 for $420M and of NGINX by F5 Networks in May 2019 for $670M.

Visibility first, security second.

From our conversations with service mesh providers and their customers, we find a common sentiment that visibility and management are the most critical features - they are what enterprises need most urgently. Layer 7 security - especially for internal APIs - comes as a second priority.

Nevertheless, microservices architecture significantly widens the internal API attack surface, and hence a number of startups have emerged that aim to secure service-to-service communications. Redberry, which had recently been in stealth, provides visibility and orchestration, and leverages AI-based anomaly detection to secure traffic between an organization’s thousands of internal APIs.

Don’t forget the on-premise stuff!

A common pattern is that lower-layered (L4) vendors are acquiring higher-layered (L7) mesh technology providers as they prepare to move into this new space.

With the complexity of today’s web-scale applications, observability needs to come from L7 to provide granular insights. Moreover, large organizations are increasingly migrating towards a cloud-native culture where networking is done at Layer 7. As such, lower-layered vendors - usually on-premise solution providers - must pivot to follow their customers.

However, the transition to cloud-native does not happen overnight. Many organizations today are midway in this process, deploying parts of their applications to public clouds, parts to private clouds, and parts remaining on-premise. Moreover, these organizations tend to deploy on complex topologies of mainframes, VMs, and containers.

IBM Research estimates around 85% of enterprises embrace a multi-hybrid-cloud model. Conservative verticals, such as financial institutions, are especially hesitant to migrate to public and even private clouds, and often have the majority of their workloads on-premise.

This complex landscape means service mesh technologies will need to embrace multi-domain features that allow Layer 7 meshes to be extended across cloud providers and on-premise data centers, and across mainframes, VMs, and containers. Istio, for example, offers multi-cluster configuration. The greatest challenge in multi-domain support is making these meshes compatible with the Layer 2-3 networking often found in on-premise sites.

Many commercial service mesh vendors are prioritizing multi-domain support, including IBM, VMware (with its NSX Service Mesh product), Avi Networks (which was acquired by VMware), and Aspen Mesh.

SuperGloo tackles multi-domain from another perspective by providing a multi-mesh orchestrator that allows DevOps to embrace a variety of mesh technologies and manage them via a single pane of glass.

Bayware takes a different approach to the multi-domain problem by connecting L2-3 with L4-7 networking. Its solution leverages an agent-based approach to ‘encapsulate’ the entire network in a software-defined, easy-to-configure environment, aiming to resolve the compatibility problems that arise with multi-domain networking.

Big Takeaways

Organizations that have been early adopters of service mesh are cloud-first, web-scale companies that already have most if not all of their workloads containerized and on the cloud. Organizations that have not yet fully gone cloud-native tend to adopt service mesh incrementally, starting with sandbox PoCs and moving to adoption per business unit or department. Usually, back-office teams are the first adopters.

Service mesh is undoubtedly innovative. But we believe that for the technology to truly gain widespread enterprise adoption, it needs to emerge outside the comforts of Layer 7 networking and offer compatibility with a variety of domains and legacy systems.

The bold companies innovating in this new space need to consider how their commercial strategy holds up against the many effective, ready-out-of-the-box open-source solutions.
